Installing Photon

Installing Photon is fairly easy: clone the GitHub repository, install the dependencies, and run the script.

git clone https://github.com/s0md3v/photon.git

Installing Dependencies

Installing dependencies in Python is pretty easy. Most developers ship a requirements.txt file with their package, listing every dependency along with the specific version the script uses. So it’s time to cd into the cloned repository and install the dependencies.

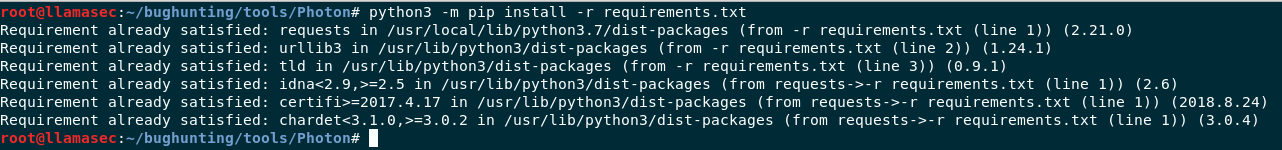

cd photon

python3 -m pip install -r requirements.txt

Here we call the python3 binary and run pip, Python’s package manager, as a module from the command line. The -r flag tells pip to read its list of requirements from the given file; pip then goes through that file line by line and installs every package listed. I already have the packages installed, so this is what I get; your output may differ.
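To see what pip will read before installing anything, the file can be filtered down to its actual requirement lines. This is only a rough sketch: list_requirements is a hypothetical helper name, and it assumes the file holds nothing but plain requirement lines, blank lines, and # comments.

```shell
# Hypothetical helper: print each requirement line from the given file,
# skipping blank lines and lines that contain only a comment.
list_requirements() {
    grep -vE '^[[:space:]]*(#.*)?$' "$1"
}
```

Inside the cloned repository it would be called as list_requirements requirements.txt.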

Photon in action

Now that our environment is all set up, it’s time to start up Photon.

python3 photon.py

If everything went well, this is the output you’ll get.

As you can see, Photon offers a lot of options for you to play with. You can crawl a single website, clone the website, set the link depth, specify user agents, and a lot more.

Crawling a Website

We’re going to start simple and crawl a single website right now.

python3 photon.py -u "https://llamasec.tk"

Note - Do NOT run your scans on llamasec unless authorized to do so.

The output gets stored in the llamasec directory, in a file called internal.txt. There isn’t much there, but then again the website is quite static. Let’s try something more dynamic, with an extra set of options this time.
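Before moving on, a quick way to gauge how much a crawl found is to count the unique entries in the results file. The sketch below assumes internal.txt holds one URL per line, which is not confirmed here, and count_unique_links is a hypothetical helper name.

```shell
# Hypothetical helper: count the unique, non-empty lines in a results file.
count_unique_links() {
    # Drop blank lines, de-duplicate, count, and strip any padding that
    # some wc implementations add around the number.
    grep -v '^[[:space:]]*$' "$1" | sort -u | wc -l | tr -d ' '
}
```

For the crawl above it would be called as count_unique_links llamasec/internal.txt.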

Photon with Options

Tesla has an open bug bounty program, so we’re going to scan them next.

python3 photon.py -u "https://www.tesla.com/" -l 1 -t 10 -o sorryElon --dns

Here we ask Photon to scan Tesla with a crawl depth of 1 (-l 1) and 10 threads (-t 10), write the results to the sorryElon output directory (-o sorryElon), and enable DNS mapping (--dns).
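If you plan to reuse this set of flags against other authorized targets, one option is to assemble the command in a small function and print it for review before running it. This is a sketch only: photon_command is a hypothetical name, and it uses nothing beyond the -u, -l, -t, -o and --dns flags shown above.

```shell
# Hypothetical wrapper: print the Photon command for a target instead of
# running it, so the flags can be checked first.
photon_command() {
    url=$1      # -u: target URL
    level=$2    # -l: crawl depth
    threads=$3  # -t: number of threads
    outdir=$4   # -o: output directory
    printf 'python3 photon.py -u "%s" -l %s -t %s -o %s --dns\n' \
        "$url" "$level" "$threads" "$outdir"
}
```

Calling photon_command "https://www.tesla.com/" 1 10 sorryElon prints the same command used in this section.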

This time Photon finds multiple JavaScript files and stores them in the output, along with a list of all the subdomains associated with that domain. One thing to note here is that the scan was pretty quick, much quicker than other tools. S0md3v also went the extra mile and added a visual DNS map, which is a great touch.